Episode Transcript

Transcripts are displayed as originally observed. Some content, including advertisements, may have changed.

8:00

in your Apple calendar, it should be able to do that

8:02

and send the invite to someone. Yeah. So

8:04

now if you launch a call, you can press a

8:06

button on the top left that says record. It

8:09

does play like a little two to three second

8:11

chime that the other person will hear that says

8:13

this call is being recorded. But then once the

8:15

call is completed, it saves a transcript down in

8:18

your Apple Notes as well as some takeaways. I

8:20

think the quality is okay, but not great.

8:23

I would imagine they'll improve it over time.

8:25

But again, there's so many people and so

8:27

many apps now that have a lot of

8:29

users and make a lot of money from

8:32

things that seem small, like older people

8:34

who have doctor's appointments over the phone

8:36

and they need to record and transcribe

8:38

the calls for their relatives. That actually

8:40

does a shocking amount of volume. And

8:43

so I think this new update is showing

8:45

them maybe pushing towards some of those use

8:47

cases and allowing consumers to do that more

8:49

easily. Yeah, and maybe just to round this

8:51

out, this idea to both of your points,

8:54

there are so many third party developers who

8:56

have historically created these apps. I mean, you

8:58

mentioned the ability to detect a plant. If

9:00

you go on AppMagic or data.ai, you

9:03

can see there are pretty massive

9:06

apps. That's their single use case,

9:08

but it works. People need it. What

9:10

happens to those companies? What does it signal

9:12

in terms of Apple's decision

9:14

to capitalize on that and maybe less

9:17

so have this open ecosystem for third

9:19

parties? Yeah, I think it kind

9:21

of raises an interesting question about what is the

9:23

point of a consumer product often? Is it just

9:25

a utility, in which case Apple might be able

9:27

to replace it? Or does it become

9:30

a social product or a community? Say

9:32

there's a standalone plant app, and

9:35

then there's the Apple plant identifier. You

9:37

might stay on the standalone plant app

9:39

if you have uploaded all of these

9:41

photos of plants that you want to

9:43

store there. And now you have friends

9:45

around the country who like similar types

9:47

of plants, and they upload and comment.

9:50

It becomes like a Strava for

9:52

plants type thing, which sounds ridiculous,

9:54

but there's massive communities of these

9:56

vertical social networks. And so

9:58

I think there's still, like, huge opportunity for

10:01

independent consumer developers. The question is just

10:03

like, how do you move beyond being

10:05

a pure utility to keeping

10:07

people on the product for other things that

10:09

they can't get from like an Apple? Yeah,

10:11

I agree. I think putting my investor hat

10:14

on, this is not a case where I'm

10:16

necessarily worried for startups. I think what

10:18

Apple showed through this also is they're going to build

10:21

probably the simplest, most utility-oriented version of

10:23

these features. And they're not going to

10:25

do an extremely complex build

10:28

out with lots of workflows and lots of

10:30

customization. So yes, they might kill some

10:32

of these standalone apps that are being

10:34

run as cash flow generating side projects.

10:36

But I don't see them as much

10:38

of a real risk to some of

10:40

the deeper venture backed

10:42

companies that are maybe more ambitious in

10:44

the product scope. If

10:51

we think about utility, one of the ways that you

10:53

drive utility is through a better model. So maybe we

10:55

should talk about some of the new models that came

10:58

out this week. We'll pivot to OpenAI first. As

11:00

of today, as we're recording, they released

11:02

their new o1 models, which are

11:04

focused on multi-step reasoning instead of just answering

11:06

directly. In fact, I think the model even

11:09

says, like, I thought about this for 32

11:11

seconds. The article

11:13

they released said that the model

11:15

performed similarly to PhD students on

11:17

challenging benchmark tasks in physics, chemistry,

11:19

and biology, and that it excels

11:21

in math and coding. They even

11:23

said that in a qualifying exam for

11:25

the International Mathematics Olympiad, GPT-4o, so

11:27

the previous model, correctly solved only

11:29

13% of problems while

11:32

the reasoning model that they just released scored an 83%.

11:35

So it's a huge difference. And this is

11:37

something actually a lot of researchers have been

11:39

talking about, this next step. I

11:41

guess opening thoughts on the model and maybe

11:43

what it signifies, what you're seeing. Yeah, it's

11:45

a huge evolution. And it's been very

11:47

hyped for a long time. So I think people are

11:49

really excited to see it come out and perform well.

11:52

I think even beyond the really

11:55

complex physics, biology, chemistry stuff, we

11:58

could see the older models struggle even

12:00

with basic reasoning. So we saw this with

12:02

the whole like how many R's are in

12:04

strawberry fiasco. And

12:07

I think what that essentially comes from is these

12:09

models are like next token predictors. And so they're

12:12

not necessarily like thinking logically about, oh, I'm

12:14

predicting that this should be the answer. But

12:16

like, if I actually think deeply about the

12:18

next step, should I check that there are

12:21

this many R's in strawberry? Is there like

12:23

another database I can search? What would a

12:25

human do to verify and validate whatever solution

12:27

they came up with to a problem? And

12:30

I think over the past, I don't

12:32

know, year, year and a half or so, researchers had

12:34

found that you could do

12:36

that decently well yourself through prompting.

12:39

Like when you asked a question

12:41

saying, think about this step by

12:43

step and explain your

12:46

reasoning. And the models would get

12:48

to different answers on like basic

12:50

questions than they would get if

12:52

you just asked the question. And so

12:54

I think it's really powerful to bring

12:56

that into the models themselves. So they're

12:58

like self reflective instead of requiring the

13:00

user to know how to prompt like

13:02

chain of thought reasoning. I agree. I

13:04

think it's really exciting actually for categories

13:07

like consumer ed tech, where actually a

13:09

huge, in some months, like a majority

13:11

of ChatGPT usage is by people

13:13

with the .edu email address or have

13:15

been using it to generate essays. But

13:17

historically, it's been pretty limited to writing,

13:20

history, those kinds of things. Because as

13:22

you said, these models are just famously

13:25

bad at math and science and other

13:27

subjects that require maybe deeper and more

13:29

complex reasoning. And so a

13:31

lot of the products we've seen there, because

13:33

the models are limited, have been the take

13:35

a picture of my math homework and

13:38

go find the answer online, which is fine

13:40

and good. And a lot of those companies will make

13:43

a lot of money. But I

13:45

think we have an opportunity now to

13:47

build deeper ed tech products that change

13:49

how people actually learn because the models

13:51

are able to correctly reason through the

13:54

steps and explain them to the user.

13:57

And when you use it today, you can see

13:59

the steps it thought about something.

14:01

So by default it doesn't show you all

14:03

the steps, but if you want or need

14:05

to see the steps, like for a

14:08

learning process, you can get them. I

14:10

did test it out right before this with the

14:12

classic consulting question, how many golf balls can fit

14:14

in a 747? And o1, the new model, got

14:16

it completely correct

14:19

in 23 seconds. I tested it on

14:21

4o, the old model, it was off by 2x, 3x.

14:25

Okay. And it took longer to generate, so

14:27

very small sample size, but promising early results

14:29

there. No, it's important. I think I saw

14:31

you tweet something about this recently, this ed

14:33

tech angle or slant on this. A lot

14:35

of people are up in arms saying this

14:37

technology is being used in classrooms, and

14:40

I think you had a really interesting take, which was

14:42

like, okay, this is actually pushing us to force

14:45

teachers to educate in a way where you

14:47

can't use this technology and you have to

14:49

think and develop reasoning. It's funny, I had

14:51

found a TikTok that was going viral that

14:53

showed there's all these new Chrome extensions for

14:55

students where you can attach it to Canvas

14:57

or whatever system you're using to take a

14:59

test or do homework, and you just screenshot

15:02

the question now directly and it pulls up

15:04

the answer and tells you it's A, B,

15:06

C, or D. And in

15:08

some ways it's like, okay, cheating, do you really

15:10

want to pay for your kid to go to

15:12

college to be doing that? But on the other

15:14

hand, before all of these models

15:16

and these tools, most kids were still

15:19

just googling those questions and picking multiple

15:21

choice. And you could argue

15:23

a multiple choice question for a lot of

15:25

subjects is probably not actually the best way

15:27

to encourage learning or to encourage

15:29

the type of learning that's actually going to

15:31

make them successful in life or to even

15:33

assess true understanding. Like when someone does a

15:35

multiple choice answer, you have no idea if

15:37

they guessed randomly, if they got to the

15:39

right answer, but had the wrong process and

15:41

they were lucky or if they actually knew

15:43

what they were doing. Yeah. And I

15:45

think the calculator comparison has been made

15:47

before in terms of AI's impact on

15:49

learning, but similar to the fact that

15:52

now that we have calculators, it took a while,

15:54

it took decades, but they teach kids math differently

15:56

and maybe focus on different things than

15:59

they did pre-calculator, when it was all

16:01

by hand. I'm hoping and thinking the same

16:03

will happen with AI, where eventually the quality

16:06

of learning is improved. And

16:08

maybe because it's easier to cheat on the

16:10

things that are not as helpful for true

16:12

understanding. Right, and I mean, if we think

16:14

about, this just came out today, is

16:17

this a signal of what's to come

16:19

for all of the other models, or

16:21

at least the large foundational models, or

16:24

do you see some sort of separation in

16:26

the way different companies approach their models and

16:28

think about how they think per se? It's

16:31

a great question. I think we're

16:34

starting to see a little bit of

16:36

a divergence between general intelligence and emotional

16:38

intelligence. And so if you're

16:40

building a model that's generally intelligent,

16:43

and you maybe want it to

16:45

have the right answers to these

16:47

complex problems, whether it's physics, math,

16:49

logic, whatever, and I think folks

16:52

like OpenAI or Anthropic or Google

16:54

are probably focused on having these

16:56

strong general intelligence models. And so

16:58

they'll all probably implement similar things

17:00

and are likely doing so now. And

17:03

then there's sort of this newer branch of companies,

17:05

I would say, that are saying, hey, we don't

17:07

actually want the model that's the best in the

17:09

world at solving math problems or coding. We

17:12

are building a consumer app, or

17:14

we are building an enterprise customer

17:16

support agent or whatever, and we

17:18

want one that feels like talking

17:20

to a human and is truly

17:22

empathetic and can take on different

17:24

personalities and is more emotionally intelligent.

17:27

And so I think we're reaching this really

17:29

interesting branching off point where you have probably

17:32

most of the big labs focused on

17:34

general intelligence and other companies focused on

17:36

emotional intelligence and the longer tail of

17:38

those use cases. So interesting. Do we

17:40

have benchmarks for that? As in there's

17:42

obviously benchmarks for the, how does it

17:44

do on math? And because we're not

17:46

quite at the perfection in terms of

17:49

utility, that's what people are measuring, but

17:51

have you guys seen any sort of?

17:53

I haven't. So I feel like for

17:55

certain communities of consumers using it for

17:57

like therapy or companionship or whatever. If

18:00

you go on the subreddits for those products

18:02

or communities, you will find

18:04

users that have created their own really primitive

18:06

benchmarks of like, hey, I took these 10

18:08

models and I asked them all of these

18:10

questions and here's how I scored them. But

18:13

I don't think there's been like emotional intelligence

18:16

benchmarks at scale. A Redditor might create it.

18:18

I would not be surprised. Yes. Maybe

18:20

after listening to this, reach out definitely

18:23

if you're building that. I

18:29

think that also relates to the

18:31

idea that these models ultimately in

18:34

themselves aren't products. They're embedded in

18:36

products. I think Olivia, you

18:38

shared a Spotify daylist tweet about how

18:40

that was just like a really great way

18:42

for an incumbent because all of the incumbents

18:45

are trying to embed these models in some

18:47

way. You said it was a really great

18:49

case study of how to do that well.

18:51

Yeah. So Spotify daylist, we both

18:53

love. I'm going to bring up mine to see what it says.

18:55

This is a risky move. It is risky. I

18:58

never share my Spotify Wrapped because basically

19:01

it's just an embarrassment. Well, but that's

19:03

okay. My emotional, gentle, wistful Thursday afternoon.

19:05

That's actually much better than it could

19:07

have been for you. Yeah. I

19:10

get a lot of screen. I say that lovingly. Yeah. So

19:12

basically what Spotify daylist does is it's

19:15

a new feature in Spotify that kind of

19:17

analyzes all of your past listening behavior and

19:19

it curates a playlist based on the types

19:21

of music, emotionally, genre wise,

19:23

mood wise that you typically listen

19:25

to at that time. And

19:27

it makes three a day, I think, or four

19:29

a day by default. Yes. And

19:32

it switches out every six or so hours.

19:35

Exactly. And the feature was very

19:37

successful. So Spotify CEO tweeted recently, I think

19:39

it was something like 70% of users are

19:41

returning week over week,

19:44

which is a really, really good retention, especially since

19:46

it's like not easy to get to within Spotify.

19:48

Like you have to go to the search bar

19:51

and search daylist. Mine is pinned now if

19:53

you click it enough. It's

19:56

not too right. Yeah. It's really

19:58

fun. And I think why it works.

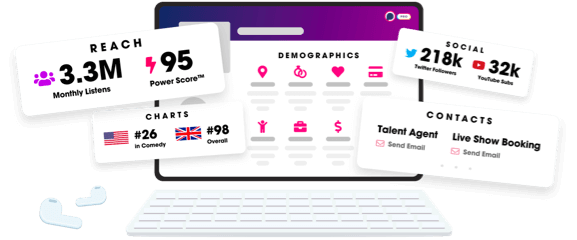

Unlock more with Podchaser Pro

- Audience Insights

- Contact Information

- Demographics

- Charts

- Sponsor History

- and More!

- Account

- Register

- Log In

- Find Friends

- Resources

- Help Center

- Blog

- API

Podchaser is the ultimate destination for podcast data, search, and discovery. Learn More

- © 2024 Podchaser, Inc.

- Privacy Policy

- Terms of Service

- Contact Us