Episode Transcript

Transcripts are displayed as originally observed. Some content, including advertisements, may have changed.


0:01

The digital divide, we've been talking about that for 20, 30 years. It is no longer a hardware issue for a lot of America. In your mind, do I believe that this stuff is for me? Or is it for someone else? Is it for the white folks? Is it for the rich folks? You look at some of these African-American communities, Latino communities, overlooked, underestimated. What if you gave those communities the most creative tools ever imagined and told them, this is not a hand grenade, this is a jet pack for you? But that's what I'm saying, man. It's not a smart issue, it's a heart issue.

0:45

Van Jones, political commentator for CNN, author and entrepreneur. His latest initiative, called Dream Machine, focuses on opening up AI access for underrepresented communities. I wanted to talk to Van in the shadow of the Democratic National Convention about how the tech world and the political world are interacting in a highly charged moment. We discuss how Kamala Harris's view of AI may differ from Joe Biden's, and how she's managed to turn herself from what he calls cringy into a Beyonce-like star. We also talk about the evolution of Elon Musk from Andrew Yang supporter to Donald Trump supporter, and key areas that Van believes are being neglected in the campaign maelstrom. He doesn't hold any punches: blunt, skeptical, even inspirational, connecting today's moment to Dr. Martin Luther King Jr. In short, it's a fun and worthwhile ride, so let's get to it.

1:41

I'm Bob Safian and this is Rapid Response.

1:50

I'm Bob Safian and I'm here with Van Jones. Van, thanks for joining us.

1:55

I'm glad to be here, brother.

1:57

You've been busy, as always, especially with the presidential race dramatically shifting over the last month.

2:00

Oh, did something happen? Yeah, did you hear that there's nonsense going on? Listen, I've been on television now for 12 years. I helped to cover the 2012 election, the 2016 election, 2020, and now 2024. And so I've seen good debate performances, bad ones. I was on set when Obama, you know, crapped the bed, but I've never seen anything like that. And then we come out and they go right to my panel. And I was like, I don't know what I'm going to say. The guy was the first person to read the handwriting on the wall and not pretend that it was something that it wasn't.

2:37

You were saying what everybody was thinking, right?

2:39

It really is crazy, Bob. You think about these stories, like the Emperor's New Clothes and that type of stuff. Nobody would possibly pretend that an emperor had on a beautiful robe if he's actually butt naked. And it's like, well, nobody would actually pretend that the president of the United States is ready to go for five more years in office when he's not. And then suddenly, ding, he's out, and ding, he's for Kamala. And ding, Kamala goes from being cringe to being cool in 24 hours. She goes from being like, you know, this kind of like, do we have a vice president? Where is she? To like Beyonce in a week. And it's like, no, so this is completely different, sir, than any other thing I'm about to cover.

3:21

And in the meantime, while you're doing this, you're also launching a new initiative, this Dream Machine Innovation Lab, to address the impact of AI on underrepresented communities. Like, is there a connection for you between what's going on in the political world and what's going on in the tech world?

3:38

There's a big connection, because if you're waiting for politics and politicians to fix this stuff, you're gonna be waiting for a long time. I think in the last century, you could be excused for having a more state-oriented, government-oriented view of change, because in the last century, you had politicians, political figures: Churchill, JFK, FDR, Dr. King. These were political people who were either politicians or protesters trying to make governments work better, trying to get the New Deal done so that working families have some support, trying to get civil rights and women's rights done so that we really all can be seen as created equal. These are all the tasks of government. What political figure is there on the world stage right now that you think is up to the task of even being a good dog catcher or city council member? I mean, compared to the challenges that we're facing, catastrophic global warming, the rise of all these authoritarian governments, the United States kind of falling all over itself and infighting, the political class is not up to this fight.

4:59

Meanwhile, who are the people really shaping tomorrow? It's not the politicians, it's the technologists. The technologists are the ones who are basically creating a new human civilization right before our eyes.

5:15

Look, I've got, you know, from my first marriage, two kids, one in college, one in high school, but I've also, in my new relationship, got two little babies, two and a half years old and six months old. By the time they're my age, they will be living in a different human civilization. Their first crushes or best friends might be AIs. When it's time for them to have kids, they might use biotech tools to design their kids. They might be buried on the moon or on Mars. All of that is different than where I grew up. I was born in 1968. This is all Jetsons stuff. And yet that's what's being created. Now, my local congressperson has very little leverage on that. I'm sorry, but there's about 10,000 people in the AI community. They're gonna have a much bigger impact on all of that than any 10,000 politicians.

6:08

And so that's why Will.i.am and I got together and said, let's launch a campaign to try to get the next generation of young minds, especially from black and brown and other overlooked communities, focused on AI. So we have a campaign called Make Wakanda Real, trying to excite the imagination of young people. What if you could use all these technology tools to solve problems? Wakanda being the, you know, incredibly technologically advanced super nation in the Marvel superhero universe. What if you could make that real? And so it's a vote of confidence in the future, in that we think that technology can be used for good.

6:52

If the driving force of creating this future is tech, look at black representation in tech: black people make up maybe 8% of tech employees and like 3% of tech C-suite executives. I mean, the creators of this future don't necessarily represent all of us, right?

7:15

It's bad, it's bad. And it's dangerous. It's dangerous. It's not, oh, it's terrible for the poor black people, though it is. Oh, it's terrible for the Native Americans and whatever, though it is. It's terrible for everybody. The last time we let one little group determine human civilization, we had 400 years of slavery, colonialism, environmental destruction. You don't want that. You want the next time you build a civilization to include everybody, because you want a new human civilization that is more in harmony with itself and the planet that we call home. And that can't be created by one little group of people. It's got to be co-authored by a lot of people.

8:01

But I'll tell you this, this will get me in trouble with some of my liberal friends. At least at the consumer level, we call it the digital divide. We've been talking about that for 20, 30 years. It is no longer a hardware issue for a lot of America. I'm not saying you don't have some deserts. You do have some housing projects. You do have some neighborhoods that are still not online. You got to deal with that. But increasingly, it's no longer a hardware problem. Most people have a smartphone.

8:34

They could download ChatGPT. They could download Midjourney. It's not so much a hardware problem. It's a wetware problem. It's in your mind, in your brain, in between these two ears: do I believe that this stuff is for me? Do I believe that this technology is supposed to be in my hands? Or is it for someone else? Is it for the white folks? Is it for the rich folks? That's a wetware problem. Is it corny or is it cool? That's a wetware problem.

9:06

And so we're trying to have a campaign that says, you look at some of these African-American communities, Latino communities, Native American, overlooked, underestimated, Appalachian: you've got creative people. You've got resilient people. You've got grit. You've got determination. You've got innovative people. What if you gave those communities the most creative tools ever imagined and told them, this is not a hand grenade, this is a jet pack for you? You don't have to go to four years of college and then go beg somebody for a bunch of capital. A lot of what you're doing now is you're replacing capital with code.

9:45

But that group, they have to believe it, right? They have to believe it to grab that.

9:49

That's what I'm saying, man. It's not a smart issue. It's a heart issue. You got a lot of smart people, but do they have that self-belief? Do they have that self-confidence?

9:58

So much of the discussion about AI and underrepresented communities has been about, like, AI being developed, you know, not in a particularly inclusive way, or about AI regulation and bias. And it doesn't sound like that's necessarily what you're obsessed with. Not that you don't think maybe that should be addressed, but that it's more about just getting folks in those communities to feel like, yeah, this is something that's cool for me to make part of my life.

10:24

I'm glad you pointed this out, because Dr. King, you know, my great hero, he would have been very concerned about everything you just said. He would have stood up and said, we want equal protection from racially biased algorithms. We want equal protection from racist robots. We want equal protection from job displacement. But he wouldn't stop there. He also would have wanted equal opportunity, equal access to the good stuff. See, that's what's been missing. You know, Dr. King didn't say, I have a critique. You know what I mean? Like, I have a set of concerns. That's not the thing, you know? He had a dream. He had a vision. And I think you gotta be on both sides of the conversation: equal protection from bad stuff, but equal opportunity, equal access, equal excitement, equal cultural momentum on the good stuff.

11:22

Because look at the black community when it comes to technology. Yes, you can say we don't participate in Silicon Valley, and we definitely don't, and we need to do more. And yet, we took two turntables and a microphone in the Bronx and created hip hop, which is now bigger than any other musical genre. That was technology. Kids literally would hack streetlights to be able to have these block parties with two turntables and a microphone. And somebody would get on and say, what a hip, a hipity hip. And suddenly you had hip hop, and it is now the dominant cultural force in the world. So if you could do that with that little bit of technology, what does that same community do with Midjourney, with ChatGPT, with Sora? We have no idea what could come. And so I just wanna make sure that somebody's on the opportunity side of this thing.

12:19

I'm curious, how do you educate yourself about AI? Like, do you spend a lot of time playing with these different tools?

12:29

Yeah, though I suck, man. It's a different mindset, this prompt thing, like trying to ask this goddamn thing the right questions. And I'm not... I think a different kind of a brain is gonna figure out that dance that really is a tango.

12:46

You gotta learn the steps, right?

12:48

Yeah, you gotta learn the steps. I took an MIT course on machine learning online. It was horrible for my self-esteem. I'm used to doing pretty good in classroom settings. It was like trying to learn a foreign language in a foreign language, but it showed me there's nothing to be scared of. It's just another tool. It can get out of hand, sure, but I mean, everything else we invented can get out of hand: bioweapons, nuclear weapons, social media. I mean, anything can get out of hand, but there are people who, if they had a laptop and they could get online and they had a couple extra hours in the evenings instead of watching TikTok all day, could create beautiful stuff, could solve really important problems, could become incredibly valuable people. And I just want that to at least be an option, Bob, because look, you know why you have never ever in your life gone to McDonald's and ordered sushi?

13:56

It'd be nasty, it'd be horrible. You'd probably get sick.

14:02

It'd be nasty, yeah. Exactly, that's it. But also, also, also, sushi is not on the menu. It's not on the menu. So you never order something that's not on the menu. I'm just trying to put the positive part of AI on the menu for overlooked, underestimated communities. And I believe that some of those folks are gonna do stuff that blows everybody's minds.

14:28

Van has a way of keeping it real, doesn't he? Let's hope AI access is more enticing than McDonald's sushi. But I get what Van's saying here: if we don't make the dream of AI available across varied communities, we'll narrow the possibilities for the future for all of us. I also agree with Van that technologists may have a bigger impact on the future than governments, which makes tech access that much more important.

14:55

After the break, we'll dig into the tech-politics crossover more, including why Kamala Harris's embrace of AI may differ from Joe Biden's, and why Elon Musk's recent actions have Van longing for, quote, "the old Elon." So stay with us.

15:18

Our 10-year anniversary as a company was coming up, and I said, you know, I really want to do something big. We settled on the idea that we were going to take a grand vacation together.

15:31

That's Capital One business customer and Pinnacle Company's founder, Chris Renner.

15:33

At the time, we had about 23 employees, and we chose to invite them and their significant others to a tropical vacation to Mexico. Everyone honestly thought we were crazy. It was 10 years, and it was time to celebrate as a team. We had survived the first few years of every small business, the uncertainty of are you going to make it or not. So we planned this amazing trip, and we ended up at the little beachside restaurant with margaritas in hand and toes in sand, and the sun was setting. It was magical just to be there together.

16:25

Using his Capital One VentureX business card, Chris was able to apply his travel rewards to fund his first company trip, which has become an annual tradition. To learn more, go to capitalone.com/business card benefits.

16:38

Before the break, CNN's Van Jones explained why he's pushing for wider AI access through a new venture called Dream Machine. Now Van digs into the politics of AI, why Harris may approach tech differently than Biden, and why this may be the most momentous year since 1968. Let's dive back in.

17:04

Earlier this year, Reid Hoffman came on my show after he'd met with Joe Biden about AI, and Reid shared some of what Biden's perspective on emerging tech is. But when I asked Reid, he didn't really know how Harris thinks about AI. Do you have any clear sense of that from her personally, from her team?

17:24

I'll say two things about it. One is I don't know. I've known the vice president for 25 years.

17:32

We came up together in San Francisco politics. First of all, if you had told me even a month ago that she would be more powerful than Taylor Swift, I would have said, in what universe is that possible? And I don't know what she thinks about this in particular. What I do know is that she's from the Bay Area and Joe Biden is not. So she grew up next door to Silicon Valley. She's a different generation. A lot of her donors and supporters, her entire career, have been Silicon Valley. And so Kamala knows a bunch of people in Silicon Valley. She's known them for a long time. I can't help but imagine that that will have a big impact on how she thinks about this stuff, as opposed to lunch-bucket Joe Biden from another century on another coast.

18:20

You've been part of a bunch of different enterprises addressing criminal justice reform, clean energy. They haven't been particularly central in this campaign. It seems more like it's focused on law and order and economic growth. Does that frustrate you?

18:35

You know, I understand the waves and the currents and the motions and stuff like that. And so sometimes your issue is hot and sometimes it's not. Unfortunately, the issues have become so polarized that if you're a Democrat talking about climate change, you're wasting your time, because anybody who agrees with you on that's gonna vote for you anyway, and you're just gonna piss off people in the middle who might not know which way to go. And no Republican can own the issue.

19:02

I remember when this issue was more of a dumb versus smart issue than a left versus right issue. But for me, look, I wish we were talking about much deeper issues in general. I think we have a real spiritual crisis in the country. People are hurting, man. I think people feel lost. I think people feel very, very concerned, afraid about the future. I think people feel increasingly anxious about other people. Like, I don't think the word neighbor means the same thing that it did when I was growing up. I mean, half the time you don't know who the hell your neighbor is. Maybe you got some app, you know, looking out, making sure the neighborhood doesn't have some bad person in it. That's not the same. The world's changing, man. I mean, China, Russia, Iran, North Korea, they've got our number. And they're messing with us. If these authoritarians win the next quarter of the century, some of the stuff we take for granted can go away. I'd rather talk about that in those terms, not in terms of, well, you're a dummy, you're a dummy. But we need to come together as a country about some of this stuff.

20:13

And instead, everything gets to be divisive, right? Like, I mean, in the last few weeks, being a parent, you know, entered the conversation, with J.D. Vance critiquing Kamala Harris's identity as a step-parent. I mean, you mentioned your children, and I know your two younger children are in this dynamic called conscious co-parenting. Like, has your personal experience of parenting impacted how you look at the conversations about parenthood in politics?

20:44

A little bit, but I mean, even if I didn't have this kind of, you know, very modern family arrangement, which people may not know about: I have a friend, and after COVID, we decided to have two kids together, and people thought that was really bizarre. But, you know, we call it conscious co-parenting, and it has its ups and downs. I wouldn't recommend it for everybody. But if you have a friend, somebody you trust and somebody you like, having kids is a lot like having a small business. You know, it's a lot of supply and demand, and income and expenses, and project management, and a lot of HR. And so if there's somebody that you would start a small business with, that might be a decent enough person to start a modern family with, and that's really been our experience. But I just think that J.D. Vance, you know, his insults against women who don't have kids are really meaningless, trivial compared to his insistence that women have kids when they don't want to. His insistence that women have kids is much, much worse than his ridicule for women who don't have kids.

21:55

For this presidential race, there have been strong words on both sides about the repercussions if the other candidate is elected. What do you think's at stake in this election?

22:07

I really do think you have very stark differences between these candidates. There are always differences, but I think the direction of society is at stake. If the very worst potential in Donald Trump would come out in his next presidency, I don't wanna find out, because if it did... He's already tested and strained American institutions to the point of breaking.

22:39

The worst of Kamala Harris would be more aid and comfort to really obnoxious people on the left who think they're better than everybody and talk down to people. That strikes me as survivable. The worst that comes from Kamala Harris is maybe too much government spending, maybe a tax policy that punishes innovation in a way, at least in the eyes of the innovators and entrepreneurs. That sucks, but you can then hire somebody else to be president in four years. That's survivable. The worst from Donald Trump, I don't know, that's 50 years to fix, 20 years, 100 years. I mean, how do you fix that stuff? So I think it's very, very consequential.

23:21

The state of the electorate is part of what makes me so anxious: there are so many people who adamantly believe that one candidate is a crook, and you can find lots of people on both sides who will make that argument about the other candidate. And I guess I was hoping that business leaders would sort of take up the middle and kind of mend some of that, but it seems like that's kind of subsided, and business folks are just like, yeah, I don't wanna piss anybody off.

23:59

I think that the business community, when Biden was clearly incapable of running and certainly incapable of serving, and the bite of taxes at the very, very top, created a permission structure for a lot of people in the business community to move in directions that are just very scary to me. I mean, listen, Elon Musk, right? He was an Andrew Yang supporter in 2020. So four years ago, Elon Musk was supporting an innovation-first Democrat. And now he's clearly to the right of Trump. And I think he imagines himself maybe being some kind of an oligarch in some more authoritarian country. That is terrifying to me. I like Elon Musk when he's trying to figure out how to get to space and how to make clean cars and how to get innovation in government going with Andrew Yang. That's a massive asset to humanity. I'd like to see a lot more of the old Elon Musk.

25:05

Well, power sometimes does strange things to people, right?

25:07

I'd like to find out. If I get some more power, I'll let you know how it goes. But in the meantime, I just get to talk about people who do have it.

25:17

When you think about where we're headed for the rest of 2024, are you optimistic?

25:21

This is a year that people are gonna talk about like '68. You had a new technology that was showing young people things that they had never seen before and getting them all riled up: color television. You had an unpopular Democratic incumbent, Johnson, who decided not to go through with the election. You had a guy named Bobby Kennedy jump into the race in '68. You've got his son, Bobby Kennedy, jumping into the race this year. You've got the Democratic convention in Chicago. I mean, it's literally like a glitch in the matrix. But to me, what I take away from it is that a lot more is possible than we think. Kamala Harris is 59, she's almost 60 years old, and she's just now coming into herself, which means anybody in America can be that unpopped kernel that pops into a completely unexpected form. And no matter how bad things look for your company, for your health, for your family, for your democracy, tomorrow's a new day. You have no idea what's coming. So much changed in a month. Like, what's gonna change in the next month? Who knows, who knows? Expect the unexpected: the debate between Vice President Harris and Donald Trump.

26:37

Don't assume that your person is gonna win, because both of them have done well and poorly in debate situations. I say all that because it's true, and also I'm trying to keep our ratings up. So we'll see.

26:52

This has been great, really, I appreciate it.

26:54

All right, to be continued. Thank you, brother. I'm gonna get it.

26:58

2024 is shaping up to be a really significant year in US history. It's an opportunity for us to dig deep, to try to understand where the spirit of this country is broken and how best to fix it. Modern politics can feel like rearranging deck chairs or, alternatively, a WWE wrestling event. But it's important for business leaders and technologists in this audience to think about what it means to care for your neighbors, why so many people feel uneasy about the future, and how to expand the dream of America, as Van Jones puts it. While we're watching the next debate on the edge of our seats about what Kamala Harris or Donald Trump might say or do, we should also think about what we're gonna do. What folks like Van say on CNN or Fox matters for the moment, but what matters most is how we're judged by future generations and future stakeholders. Let's hope we can keep our eye on the ball. I'm Bob Safian, thanks for listening.

28:08

One of the customers tweeted, they got an insight that said, why don't you try adding a quiz module onto your website? It became one of the most interacted-with modules on their site. It was so cool to watch. I was in Vegas about to go on stage to talk about the redesign of Clarity, and I saw this tweet, and it gave me a little pep in my step. We put in a lot of effort to make sure we are customer focused.

28:35

I am Priyanka Vaid, a product lead at Microsoft Clarity.

28:37

And I'm Kiraz Vaisal. I'm a software engineering manager for Clarity Frontend.

28:44

Priyanka and Kiraz are members of the team behind Microsoft Clarity, a free tool to analyze user behavior online, delivering data and insights.

28:53

Our customers were saying, why don't you have this as a mobile app solution? It was a big investment, making it really visual, really easy, super structured, so that anyone who wants to dice and slice the data is able to get those insights for app experiences. Clarity is not only focused on customer experience, it's focused on a wide range of customers. We want to make sure that mom-and-pop shops, you know, a single blogger, can come to Clarity, get insights, and make improvements on their sites. It may seem small, but just to get those insights, they're amazed that we are trying to make their lives easy.

29:32

To learn more, go to clarity.microsoft.com.

29:38

Rapid Response is a WaitWhat original. I'm Bob Safian. Our executive producer is Yves Tro. Our producer is Alex Morris. Assistant producer is Masha Makutonina. Mixing and mastering by Aaron Bastagnelli. Theme music by Ryan Holiday. Our head of podcasts is Lital Malad. For more, visit rapidresponseshow.com.

30:07

Want advice from some of the top minds in business? Whether you're an entrepreneur just getting started or facing an uncertain fork in the road, Masters of Scale wants to help. Send us your questions and we'll get the legendary leaders who come on our show to offer you advice. Send in questions by emailing hello@mastersofscale.com, or call us at 919-627-8377 to leave a message. That's 919-MASTERS.

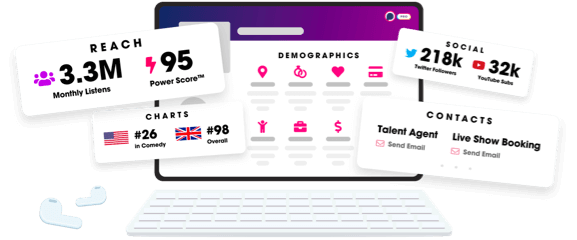

Unlock more with Podchaser Pro

- Audience Insights

- Contact Information

- Demographics

- Charts

- Sponsor History

- and More!

- Account

- Register

- Log In

- Find Friends

- Resources

- Help Center

- Blog

- API

Podchaser is the ultimate destination for podcast data, search, and discovery. Learn More

- © 2024 Podchaser, Inc.

- Privacy Policy

- Terms of Service

- Contact Us